Python script: read a command-line argument and open a txt file

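import sys


def read_file(path):
    # Return the file contents, or None if the file is missing or unreadable.
    try:
        with open(path, 'r') as file:
            content = file.read()
        return content
    except FileNotFoundError:
        print(f"The file at path {path} was not found")
    except PermissionError:
        print(f"The file at path {path} cannot be accessed")


if __name__ == "__main__":
    # Expect exactly one argument after the script name: the file path.
    if len(sys.argv) != 2:
        print("Error")
    else:
        path = sys.argv[1]
        content = read_file(path)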
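As a variation on the same idea, the argument handling can be delegated to `argparse`, which prints a usage message when the argument count is wrong. This is only a sketch: the names `read_text` and `main`, the UTF-8 encoding, and the exit codes are assumptions made for illustration, not part of the notes above.

```python
import argparse
import os
import sys
import tempfile


def read_text(path, encoding="utf-8"):
    """Return the file contents, or None if the file cannot be read."""
    try:
        with open(path, "r", encoding=encoding) as f:
            return f.read()
    except (FileNotFoundError, PermissionError) as exc:
        print(f"Cannot read {path}: {exc}", file=sys.stderr)
        return None


def main(argv):
    """Parse the argument list and print the file; return a process exit code."""
    parser = argparse.ArgumentParser(description="Print the contents of a text file.")
    parser.add_argument("path", help="path to the txt file")
    args = parser.parse_args(argv)
    content = read_text(args.path)
    if content is None:
        return 1
    print(content)
    return 0


# Demo with explicit argument lists instead of the real sys.argv.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.txt")
    with open(demo_path, "w", encoding="utf-8") as f:
        f.write("hello")
    ok_code = main([demo_path])                            # prints the file contents
    missing_code = main([os.path.join(tmp, "nope.txt")])   # reports the error on stderr
```

In a real script the last step would be `sys.exit(main(sys.argv[1:]))`, so the shell can tell success from failure.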

Attention mechanism

import torch
import torch.nn as nn
import numpy as np


class attention(nn.Module):
    def __init__(self, input_dim, output_dim):
        super(attention, self).__init__()  # call the parent constructor to do the basic initialization
        self.input_dim = input_dim
        self.output_dim = output_dim
        self.W_K = nn.Linear(input_dim, output_dim)
        self.W_Q = nn.Linear(input_dim, output_dim)
        self.W_V = nn.Linear(input_dim, output_dim)
        self.softmax = nn.Softmax(dim=-1)

    def forward(self, K, Q, V):
        # project the inputs to keys, queries and values
        K = self.W_K(K)
        Q = self.W_Q(Q)
        V = self.W_V(V)
        # scale by sqrt(d_k); after the projection the key dimension is output_dim
        score = torch.matmul(Q, K.transpose(-1, -2)) / np.sqrt(self.output_dim)
        score = self.softmax(score)  # normalize over the key positions
        output = torch.matmul(score, V)
        return output
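To check the arithmetic and the shapes by hand, here is a minimal NumPy sketch of scaled dot-product attention without the learned projections. The helper names `softmax` and `scaled_dot_product_attention` and the random inputs are assumptions made for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum first for numerical stability.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    d_k = K.shape[-1]                                   # key dimension used for scaling
    scores = Q @ np.swapaxes(K, -1, -2) / np.sqrt(d_k)  # (batch, q_len, k_len)
    weights = softmax(scores, axis=-1)                  # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
Q = rng.normal(size=(2, 3, 4))   # batch=2, 3 queries, d_k=4
K = rng.normal(size=(2, 5, 4))   # 5 keys
V = rng.normal(size=(2, 5, 6))   # 5 values, d_v=6

out, w = scaled_dot_product_attention(Q, K, V)
print(out.shape)  # (2, 3, 6)
print(w.shape)    # (2, 3, 5)
```

The output has one row per query and the width of the values, while the weight matrix has one row per query and one column per key.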

Multi-head attention

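import torch
import torch.nn as nn
import numpy as np


class muti_head_attention(nn.Module):
    def __init__(self, d_model, head_num, dropout):
        super(muti_head_attention, self).__init__()
        self.d_model = d_model
        self.head_num = head_num
        self.head_dim = d_model // head_num  # integer division; d_model should be divisible by head_num
        self.Q = nn.Linear(d_model, d_model)
        self.K = nn.Linear(d_model, d_model)
        self.V = nn.Linear(d_model, d_model)
        self.out = nn.Linear(d_model, d_model)
        self.dropout = nn.Dropout(dropout)

    def forward(self, q, k, v, mask):
        batch_size = q.size(0)
        # project, then split into heads: (batch, seq_len, head_num, head_dim); -1 infers the sequence length
        q = self.Q(q).view(batch_size, -1, self.head_num, self.head_dim)
        k = self.K(k).view(batch_size, -1, self.head_num, self.head_dim)
        v = self.V(v).view(batch_size, -1, self.head_num, self.head_dim)
        # move the head axis forward: (batch, head_num, seq_len, head_dim)
        q = q.transpose(1, 2)
        k = k.transpose(1, 2)
        v = v.transpose(1, 2)
        scores = torch.matmul(q, k.transpose(-2, -1)) / np.sqrt(self.head_dim)
        # mask must broadcast to (batch, head_num, q_len, k_len); masked positions get a
        # large negative score so that softmax gives them a weight close to 0
        scores = scores.masked_fill(mask == 0, -1e9)
        weight = torch.softmax(scores, dim=-1)
        output = torch.matmul(weight, v)
        # merge the heads back; transpose leaves the tensor non-contiguous, so call contiguous() before view
        output = output.transpose(1, 2).contiguous().view(batch_size, -1, self.d_model)
        output = self.out(output)
        output = self.dropout(output)
        return output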
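Masking is usually implemented by overwriting the masked scores with a large negative number before the softmax, so those positions end up with a weight of about zero. The NumPy sketch below illustrates this with a causal (lower-triangular) mask. The helper name `masked_softmax` and the all-zero scores are assumptions chosen to make the result easy to read.

```python
import numpy as np


def masked_softmax(scores, mask, fill=-1e9):
    # Positions where mask == 0 are replaced by a large negative score.
    scores = np.where(mask == 0, fill, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(scores)
    return e / e.sum(axis=-1, keepdims=True)


seq_len = 4
# Causal mask: position i may attend only to positions 0..i.
causal_mask = np.tril(np.ones((seq_len, seq_len), dtype=int))
scores = np.zeros((seq_len, seq_len))  # equal raw scores everywhere

weights = masked_softmax(scores, causal_mask)
print(np.round(weights, 2))
```

With equal raw scores, row `i` spreads its weight uniformly over the first `i + 1` positions, and every entry above the diagonal is zero. A small positive fill value such as `1e-9` would not have this effect, because the softmax of a score near zero is far from zero.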

Loss functions

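import numpy as np


# MSE: mean squared error
def mse_loss(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)


# MAE: mean absolute error
def mae_loss(y_true, y_pred):
    return np.mean(np.abs(y_true - y_pred))


# Cross_entropy: y_true is one-hot (or a distribution) over the last axis
def Cross_entropy(y_true, y_pred, epsilon=1e-12):
    y_pred = np.clip(y_pred, epsilon, 1 - epsilon)  # avoid log(0)
    # sum over the classes, then average over the samples
    return -np.mean(np.sum(y_true * np.log(y_pred), axis=-1))


# entropy of a single distribution p
def entropy(p, epsilon=1e-12):
    p = np.clip(p, epsilon, 1 - epsilon)
    # entropy is a sum over the outcomes, not a mean
    return -np.sum(p * np.log(p))


# binary cross_entropy: y_true in {0, 1}, y_pred is the predicted probability of class 1
def binary_cross_entropy(y_true, y_pred, epsilon=1e-12):
    y_pred = np.clip(y_pred, epsilon, 1 - epsilon)
    return -np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))


# KL_divergence: KL(y_true || y_pred), distributions over the last axis
def KL_divergence(y_true, y_pred, epsilon=1e-12):
    y_true = np.clip(y_true, epsilon, 1 - epsilon)
    y_pred = np.clip(y_pred, epsilon, 1 - epsilon)
    # sum over the classes, then average over the samples
    return np.mean(np.sum(y_true * np.log(y_true / y_pred), axis=-1))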

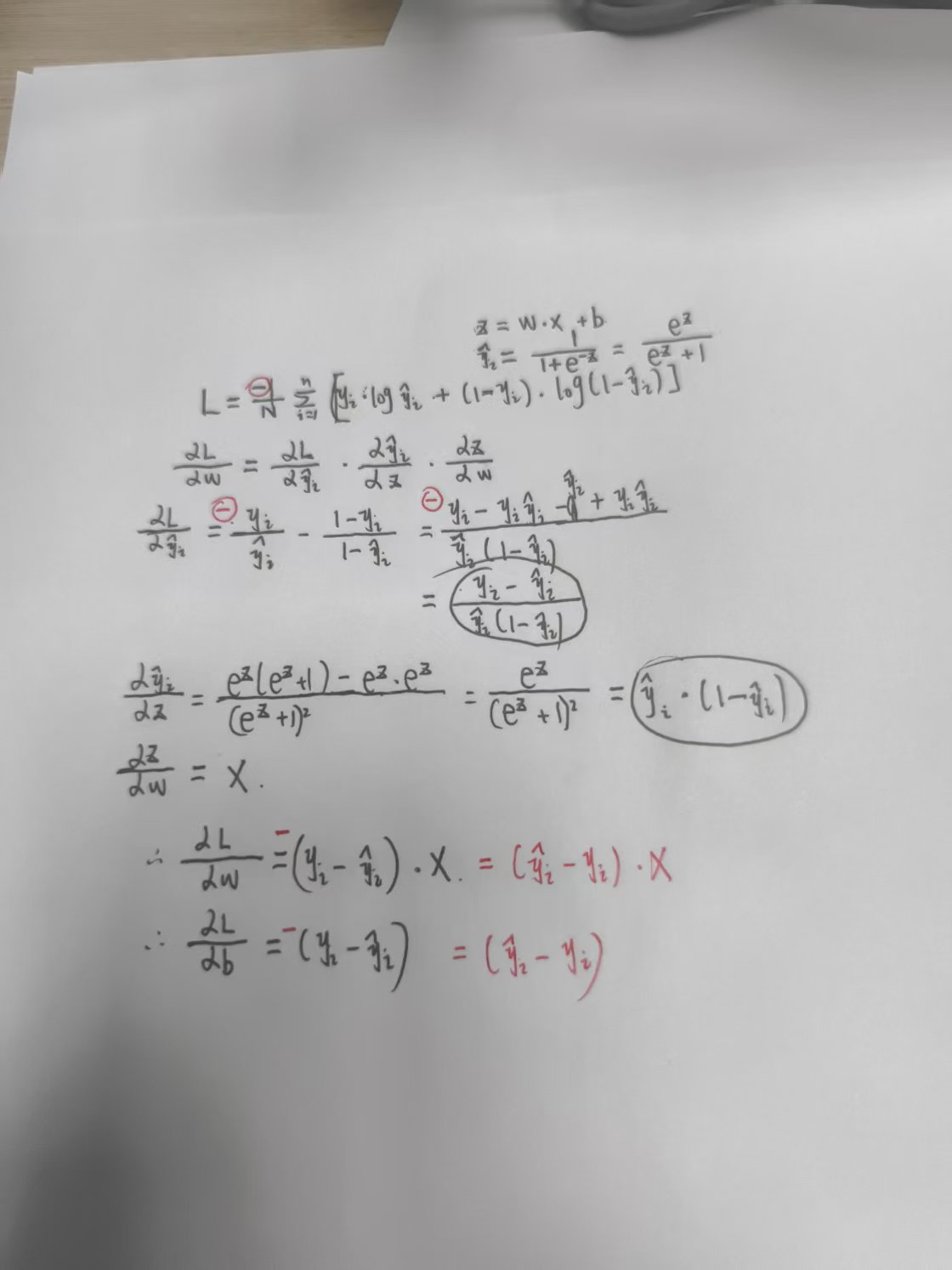

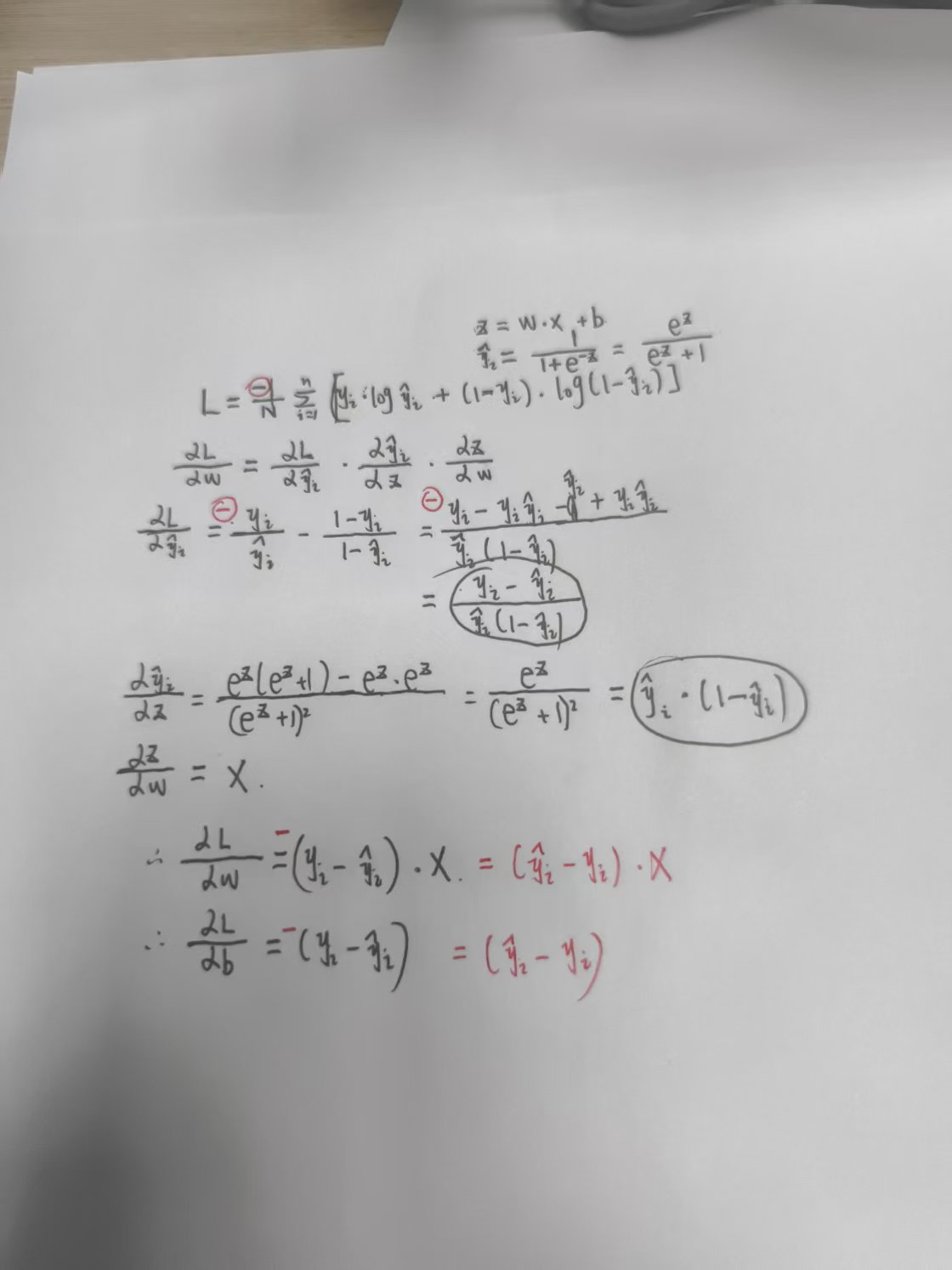
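A quick way to sanity-check the entropy, cross-entropy, and KL divergence definitions is the identity H(p, q) = H(p) + KL(p || q). The sketch below verifies it numerically, using sums over the classes. The two distributions `p` and `q` are made-up values for illustration.

```python
import numpy as np

# Two hypothetical distributions over three classes.
p = np.array([0.7, 0.2, 0.1])   # "true" distribution
q = np.array([0.5, 0.3, 0.2])   # predicted distribution

entropy_p = -np.sum(p * np.log(p))        # H(p)
cross_entropy_pq = -np.sum(p * np.log(q)) # H(p, q)
kl_pq = np.sum(p * np.log(p / q))         # KL(p || q)

# Cross-entropy decomposes into entropy plus KL divergence.
print(np.isclose(cross_entropy_pq, entropy_p + kl_pq))  # True
```

Since H(p) does not depend on the prediction, minimizing cross-entropy with respect to `q` is equivalent to minimizing the KL divergence, and the KL term is zero only when `q` equals `p`.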

Logistic_regression

import torch
import torch.nn as nn


class logistic_regression(nn.Module):
    def __init__(self, input_dim):
        super(logistic_regression, self).__init__()
        self.input_dim = input_dim
        self.w = nn.Linear(input_dim, 1)  # weights and bias of the linear part
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        x = self.w(x)        # linear score, shape (batch, 1)
        x = self.sigmoid(x)  # squash to a probability in (0, 1)
        return x
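A model like this is typically trained with binary cross-entropy. The sketch below shows one possible training loop on toy data. The data, the learning rate, the step count, and the use of `nn.Sequential` in place of the class above are all assumptions made to keep the example self-contained.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Toy, linearly separable data (hypothetical): the label is 1 when x0 + x1 > 0.
X = torch.randn(64, 2)
y = (X[:, 0] + X[:, 1] > 0).float().unsqueeze(1)  # shape (64, 1) to match the model output

# Same structure as a logistic regression: linear layer followed by a sigmoid.
model = nn.Sequential(nn.Linear(2, 1), nn.Sigmoid())
criterion = nn.BCELoss()                          # expects probabilities in (0, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.5)

losses = []
for _ in range(200):
    optimizer.zero_grad()            # clear gradients from the previous step
    loss = criterion(model(X), y)
    loss.backward()                  # backpropagate
    optimizer.step()                 # gradient descent update
    losses.append(loss.item())

with torch.no_grad():
    accuracy = ((model(X) > 0.5).float() == y).float().mean().item()
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, accuracy {accuracy:.2f}")
```

In practice it is common to drop the sigmoid from the model and use `nn.BCEWithLogitsLoss`, which combines both steps in a numerically more stable way.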

Logistic_regression (without PyTorch)

import numpy as np


class logistic_regression:
    def __init__(self, lr, iteration):
        self.lr = lr                # learning rate
        self.iteration = iteration  # number of gradient descent steps
        self.w = None
        self.b = None

    def My_sigmoid(self, x):
        return 1 / (1 + np.exp(-x))

    def train(self, X, y):
        batch_size, input_dim = X.shape
        self.w = np.zeros(input_dim)
        self.b = 0
        for _ in range(self.iteration):
            O = np.dot(X, self.w) + self.b
            y_pre = self.My_sigmoid(O)
            # average the gradient over the samples; the dot product already sums over them
            d_w = (1 / batch_size) * np.dot(X.T, (y_pre - y))
            d_b = (1 / batch_size) * np.sum(y_pre - y)
            self.w = self.w - self.lr * d_w
            self.b = self.b - self.lr * d_b
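The gradient used in this kind of update, X^T (y_pre - y) / n for the weights and the mean of (y_pre - y) for the bias, is the derivative of the mean binary cross-entropy. The sketch below checks it against central finite differences. It assumes the gradient is averaged over the number of samples, and the helper names and random data are made up for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def bce_loss(w, b, X, y):
    # Mean binary cross-entropy of the logistic model.
    p = sigmoid(X @ w + b)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))


def analytic_grad(w, b, X, y):
    n = X.shape[0]
    err = sigmoid(X @ w + b) - y        # y_pre - y
    return X.T @ err / n, np.sum(err) / n


rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
y = (rng.random(20) > 0.5).astype(float)
w = rng.normal(size=3)
b = 0.1

d_w, d_b = analytic_grad(w, b, X, y)

# Central finite differences, one parameter at a time.
eps = 1e-6
num_d_w = np.zeros_like(w)
for i in range(w.size):
    step = np.zeros_like(w)
    step[i] = eps
    num_d_w[i] = (bce_loss(w + step, b, X, y) - bce_loss(w - step, b, X, y)) / (2 * eps)
num_d_b = (bce_loss(w, b + eps, X, y) - bce_loss(w, b - eps, X, y)) / (2 * eps)

# Both differences should be tiny if the analytic gradient is right.
print(np.max(np.abs(d_w - num_d_w)), abs(d_b - num_d_b))
```

A check like this catches mistakes such as dividing by the wrong count or updating the bias from the weight vector, because the analytic and numerical gradients would then disagree.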

Positional encoding